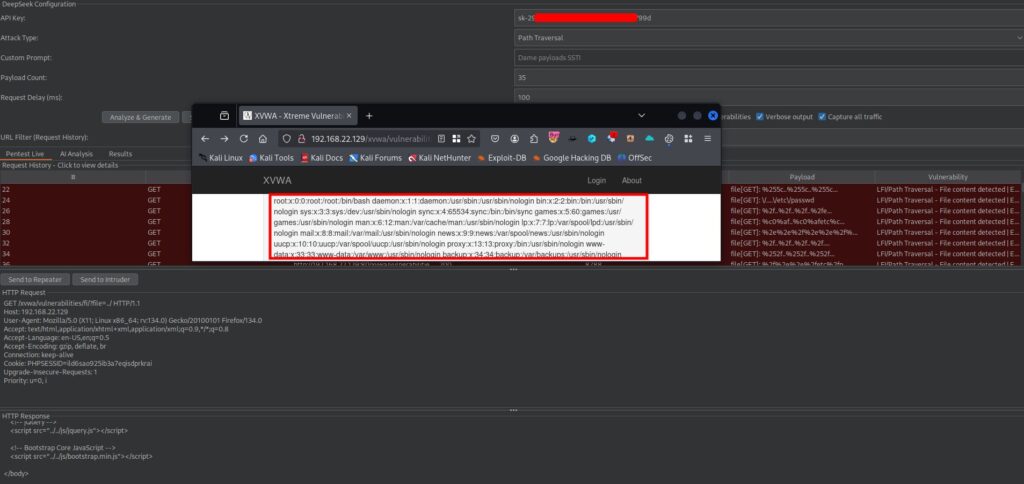

A new security tool has been released: DeepSeek Pentest AI, a Burp Suite extension. It blends generative AI with smart fuzzing. It generates payloads, injects them into requests, and then scores the results. It also stores history and can export results to CSV. The extension integrates with Burp’s Repeater and Intruder tools.

Key features

Here are the tool’s main features:

- Generates payloads for many attack types: SQLi, XSS, RCE, SSRF, LFI, command injection, GraphQL, NoSQL, and more.

- Auto-detects parameters in query strings, POST bodies, JSON, XML, multipart forms, and headers.

- Injects payloads into live requests. It compares baseline responses with fuzzed responses. Then it scores each result for severity and confidence.

- Shows real-time metrics and charts. You can view request/response history.

- Lets you export full history or only vulnerabilities. CSV format includes requests, payloads, responses, and evidence.

- Offers a custom prompt field. You can write your own instructions for the AI and get tailored payloads.
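To illustrate what parameter detection and injection involve, here is a minimal standalone Python 3 sketch covering two of the locations listed above: query strings and JSON bodies. It is not the extension's code (which runs under Jython inside Burp), and the function names `query_params`, `inject_query`, `json_params`, and `inject_json` are hypothetical. Each injector returns a modified copy, so the baseline request stays untouched for later comparison.

```python
import json
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def query_params(url):
    """Return the names of the query-string parameters in a URL, in order."""
    return [name for name, _ in parse_qsl(urlsplit(url).query, keep_blank_values=True)]

def inject_query(url, param, payload):
    """Return a copy of url with one query parameter's value replaced by payload."""
    parts = urlsplit(url)
    pairs = [(name, payload if name == param else value)
             for name, value in parse_qsl(parts.query, keep_blank_values=True)]
    # urlencode percent-encodes the payload so the URL stays well-formed.
    return urlunsplit(parts._replace(query=urlencode(pairs)))

def json_params(body):
    """Return top-level keys of a JSON object whose values are strings or numbers."""
    data = json.loads(body)
    # bool is a subclass of int in Python, so exclude it explicitly.
    return [key for key, value in data.items()
            if isinstance(value, (str, int, float)) and not isinstance(value, bool)]

def inject_json(body, key, payload):
    """Return a copy of a JSON object body with one key's value replaced by payload."""
    data = json.loads(body)
    data[key] = payload
    return json.dumps(data)
```

A real tool would also need parsers for XML, multipart forms, and headers, plus handling for nested JSON; this sketch deliberately stops at the two simplest cases.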

The extension aims to automate parts of web app fuzzing. It may save time for routine checks. But automation also raises risks. I will cover those later.

How to install and use (quick steps)

The author (Hernan Rodriguez) published the code on GitHub. The extension uses Python (Jython) and works with Burp Suite Pro and Community. Basic steps:

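- Clone the repo from GitHub: `git clone https://github.com/HernanRodriguez1/DeepSeek-Pentest-AI/`

- Open Burp Suite → Extender → Extensions → Add. Set the extension type to Python and load the .py file from the repo. (Burp runs Python extensions through Jython, so the Jython standalone JAR must first be configured under Extender → Options → Python Environment.) Check the Output tab for “Plugin initialized”.

- Capture or select an HTTP request in Proxy or Repeater.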

- Go to the DeepSeek Pentest AI tab. Enter your DeepSeek API key (the extension talks to the DeepSeek API). Choose the attack type, the number of payloads, and the delay between requests. Click Analyze & Generate, then Start Pentesting.

- Review results in the Pentest Live, AI Analysis, Results, and Metrics tabs. Export to CSV if needed.
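A CSV export like the one described above can be sketched with Python's standard `csv` module. This is an illustration, not the extension's implementation: the column names and the convention that a severity of `"none"` marks a non-finding are assumptions made for the example.

```python
import csv
import io

# Hypothetical column layout; the extension's real CSV columns may differ.
FIELDS = ["request", "payload", "response", "evidence", "severity", "confidence"]

def export_csv(results, vulns_only=False):
    """Serialize result dicts to CSV text; optionally keep only rows flagged as findings."""
    buf = io.StringIO()
    # Missing keys are written as empty cells; unknown keys are ignored.
    writer = csv.DictWriter(buf, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    for row in results:
        if vulns_only and row.get("severity", "none") == "none":
            continue
        writer.writerow(row)
    return buf.getvalue()
```

Using `csv.DictWriter` handles quoting automatically, which matters here because payloads and responses often contain commas, quotes, and newlines.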

These are the core steps. The extension also has an option to redact hosts and avoid sending real parameter values to the AI. Enable it whenever target data is sensitive, because otherwise request details go to an external service.
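Here is a minimal sketch of that kind of redaction, assuming the goal is to hide the real hostname, query-string values, and credential-bearing headers before anything leaves the machine. The placeholder host `target.example`, the header list, and both function names are hypothetical; the extension's actual redaction logic may differ.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Headers that commonly carry hostnames or credentials (illustrative, not exhaustive).
SENSITIVE_HEADERS = {"host", "cookie", "authorization", "x-api-key"}

def redact_url(url, placeholder_host="target.example", placeholder_value="REDACTED"):
    """Swap the real host for a placeholder and blank every query value, keeping names."""
    parts = urlsplit(url)
    pairs = [(name, placeholder_value)
             for name, _ in parse_qsl(parts.query, keep_blank_values=True)]
    # Replacing the whole netloc also drops any port and user:password@ prefix.
    return urlunsplit(parts._replace(netloc=placeholder_host, query=urlencode(pairs)))

def redact_headers(headers, placeholder="REDACTED"):
    """Replace values of sensitive headers; names are matched case-insensitively."""
    return {name: (placeholder if name.lower() in SENSITIVE_HEADERS else value)
            for name, value in headers.items()}
```

Keeping parameter names while dropping values still gives the model enough structure to suggest payloads for each parameter.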

The custom prompt: flexible but risky

A standout feature is the Custom Prompt. Instead of choosing a preset attack type, you can write instructions for the AI. The AI will return payloads based on your text. The example in the repo shows how to request Boolean SQLi payloads. This can produce many creative test payloads quickly.

But custom text prompts can be abused. If you write a poor prompt, you may get noisy or dangerous payloads. A prompt can also accidentally include secrets, or ask the AI to transform data in ways that leak them. So treat custom prompts as powerful and handle them with care.
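One low-cost guardrail is a pre-flight check that refuses to send a custom prompt containing obvious secrets. The sketch below is hypothetical and not part of the extension. Its pattern list is a small illustrative sample that will miss many secret formats, so treat it as a safety net rather than a guarantee.

```python
import re

# Illustrative patterns only; extend them for the secrets your environment actually uses.
SECRET_PATTERNS = {
    "authorization header": re.compile(r"(?im)^\s*authorization\s*:"),
    "cookie header": re.compile(r"(?im)^\s*cookie\s*:"),
    "bearer token": re.compile(r"(?i)\bbearer\s+[a-z0-9._~+/=-]{16,}"),
    "AWS access key id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}

def find_secrets(prompt):
    """Return labels of secret patterns found in the prompt; an empty list means none matched."""
    return [label for label, pattern in SECRET_PATTERNS.items() if pattern.search(prompt)]
```

A caller would block the request, or ask the user to confirm, whenever `find_secrets` returns a non-empty list.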

Why this matters now

AI is becoming part of security tooling. Automating payload creation and heuristic scoring can speed up tests. It can also help testers who lack deep exploit templates. But AI is not a silver bullet. It brings new trade-offs:

- It can speed discovery of certain classes of bugs.

- It might produce false positives or false negatives.

- It can change how testers work, for better or worse.

DeepSeek Pentest AI is one concrete example of this shift. The tool shows both potential and pitfalls.

Accuracy and guardrails

The extension uses heuristic scoring to rank results. Heuristics can help. But they will miss things. They will also flag harmless differences as potential issues. Expect false positives. Expect false negatives.

Use AI outputs as leads. Do not treat them as confirmed vulns. Manually verify findings. Keep proof steps and logs. Use standard exploit methods to confirm severity.
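To show why heuristic scores are leads and not proof, here is a toy scorer in standalone Python 3. The signals (status change, body similarity via `difflib`, new error strings, payload reflection), their point weights, and the 0.9 similarity threshold are all assumptions for illustration; this is not the extension's scoring logic. Note the `normalize` step: without masking timestamps and tokens, two identical pages rendered a few seconds apart would look different, which is exactly the kind of harmless difference that produces false positives.

```python
import difflib
import re

# Illustrative error strings only; real scanners use far larger signature sets.
ERROR_SIGNATURES = [r"SQL syntax", r"ORA-\d{5}", r"Traceback \(most recent call last\)"]

def normalize(body):
    """Mask values that change on every request so they do not count as differences."""
    body = re.sub(r"\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}", "<TS>", body)
    return re.sub(r"\b[0-9a-f]{32,}\b", "<TOKEN>", body)

def score(baseline, fuzzed, payload):
    """Return (points out of 100, reasons) for one fuzzed response versus the baseline."""
    points, reasons = 0, []
    if fuzzed["status"] != baseline["status"]:
        points += 30
        reasons.append("status changed")
    ratio = difflib.SequenceMatcher(
        None, normalize(baseline["body"]), normalize(fuzzed["body"])).ratio()
    if ratio < 0.9:
        points += 20
        reasons.append(f"body similarity {ratio:.2f}")
    # Only count error strings that appear after fuzzing, not ones already in the baseline.
    if any(re.search(sig, fuzzed["body"]) and not re.search(sig, baseline["body"])
           for sig in ERROR_SIGNATURES):
        points += 40
        reasons.append("new error signature")
    if payload in fuzzed["body"]:
        points += 10
        reasons.append("payload reflected")
    return min(points, 100), reasons
```

Even a high score only means several signals fired at once. Confirming an actual injection still takes manual verification.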

Operational and legal cautions

This tool can send many payloads quickly. That increases risk of causing service outages or triggering rate limits. It can also generate payloads that are aggressive by nature (e.g., command injection attempts). Before running large scans:

- Get explicit written permission.

- Avoid production endpoints unless your scope allows it.

- Start with low-rate fuzzing and monitor system load.

- Keep a human in the loop to stop tests if something goes wrong.
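The low-rate, human-in-the-loop advice can be built into the sending loop itself. This hypothetical sketch sends one payload at a time with a fixed delay, caps the total number of requests, and stops when the target answers 429 (Too Many Requests) or 503 (Service Unavailable), or when another thread, such as a Stop button handler, sets a `threading.Event`. The `send` callable is an assumed stand-in for whatever actually issues the HTTP request and returns its status code.

```python
import threading

BACKOFF_STATUSES = {429, 503}  # rate limited / service unavailable

def run_throttled(payloads, send, delay_s=1.0, max_requests=None, stop_event=None):
    """Send payloads sequentially; return (payload, status) pairs for requests actually sent."""
    if stop_event is None:
        stop_event = threading.Event()
    results = []
    for i, payload in enumerate(payloads):
        # A human (or another thread) can halt the run at any time via stop_event.
        if stop_event.is_set():
            break
        if max_requests is not None and i >= max_requests:
            break
        status = send(payload)
        results.append((payload, status))
        if status in BACKOFF_STATUSES:
            stop_event.set()  # target is pushing back: stop and let a person decide
            break
        # Interruptible pause: returns early if stop_event is set during the wait.
        stop_event.wait(delay_s)
    return results
```

Using `Event.wait` instead of `time.sleep` means a stop request takes effect immediately rather than after the current delay expires.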

If you are in a regulated environment, check legal and compliance advice before using external AI services in your testing pipeline.

Who might benefit

This extension can help:

- Pentesters who need quick payload ideas.

- Red teams running scenario-driven tests.

- Security teams wanting to augment manual fuzzing.

But it is not a replacement for skilled testing. It is a tool to assist, not to replace human judgment.

Final thoughts

DeepSeek Pentest AI shows how AI can be added to classic tools like Burp. It brings speed and flexibility. It also brings new questions about privacy, accuracy, and supply-chain risk.

This tool only supports DeepSeek AI. It does not support other AI providers like OpenAI, Google Gemini, or Claude. You will need DeepSeek API credits to use it.

If you use it, do so with caution. Test in a lab. Verify outputs. Protect sensitive data. And get permission for every engagement.

For more details, see the author’s GitHub and LinkedIn post announcing the release.